Experimenting with Neural Radiance Fields (NeRF)

Neural volume rendering has attracted a lot of attention over the past year, so I decided to venture into this exciting area over the weekend.

Thanks to the authors of NeRF (see the reference at the end of the post) and their publicly released implementation, I was able to optimize NeRF for 300k iterations on the fern dataset in about 21 hours on my RTX 3070.

Note that the conda environment provided by the authors didn't quite work for me, since it depends on TensorFlow v1.15 with CUDA 10, which predates support for the RTX 3070's Ampere architecture.

To get it running on my setup, I built my own Docker image with TensorFlow v2.4.1 and CUDA 11, and made some minor updates to the code. For those interested, you can find my changes at this fork. You can also pull the Docker image directly:

```shell
docker pull dennislui/nerf-241
```

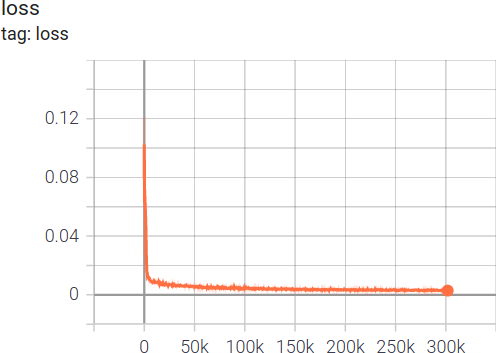

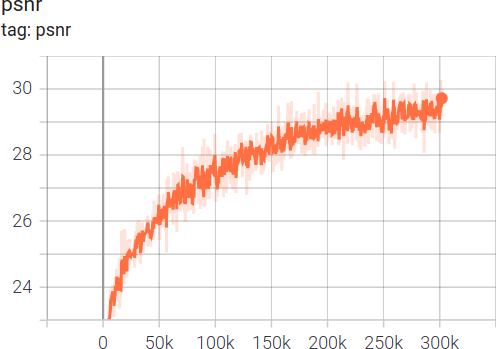

The following plots show the loss and the peak signal-to-noise ratio (PSNR) over the 300k iterations:
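To read the two curves together, it helps to recall that NeRF is trained with a mean squared error (MSE) loss on rendered pixel colors, and that for pixel values in [0, 1] the PSNR is simply -10 log10(MSE). Below is a minimal sketch of that conversion, assuming pixel values in [0, 1]; `mse_to_psnr` and `psnr_to_mse` are hypothetical helper names, not functions from the authors' repo.

```python
import math


def mse_to_psnr(mse: float) -> float:
    """PSNR in dB for images with pixel values in [0, 1] (peak value 1)."""
    # PSNR = 10 * log10(MAX^2 / MSE); with MAX = 1 this reduces to -10 * log10(MSE)
    return -10.0 * math.log10(mse)


def psnr_to_mse(psnr: float) -> float:
    """Inverse mapping: the MSE that corresponds to a given PSNR in dB."""
    return 10.0 ** (-psnr / 10.0)


# Every 10x drop in MSE adds 10 dB of PSNR
for mse in (1e-2, 1e-3, 1e-4):
    print(f"MSE {mse:.0e} -> PSNR {mse_to_psnr(mse):.1f} dB")
```

One caveat: as far as I can tell, the reference code sums the MSE of the coarse and fine networks into the plotted loss, while PSNR is computed from the fine network's MSE only, so the two curves are closely related but not exact transforms of each other.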

Now, compare the RGB and disparity outputs of the optimized low-resolution NeRF at 50k and 300k iterations:
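Both maps come from the same volume-rendering step described in the NeRF paper: each sample along a ray gets an opacity from its density, the samples are alpha-composited front to back, and the resulting weights produce the RGB color and an expected depth, whose inverse gives disparity. The sketch below shows this for a single ray in plain Python. `composite_ray` is a hypothetical helper, not the authors' code; the effectively infinite last interval (1e10) and the 1e-10 clamp in the disparity reflect my reading of the reference implementation and should be treated as assumptions. It also omits stratified and hierarchical sampling and the noise added to densities during training.

```python
import math
from typing import List, Tuple

RGB = Tuple[float, float, float]


def composite_ray(sigmas: List[float], colors: List[RGB],
                  t_vals: List[float]) -> Tuple[RGB, float, float]:
    """Alpha-composite samples along one ray into (rgb, depth, disparity).

    sigmas: non-negative densities at each sample
    colors: RGB values in [0, 1] at each sample
    t_vals: sorted sample distances along the ray
    """
    n = len(t_vals)
    # Spacing between adjacent samples; the last interval is treated as
    # effectively infinite (assumption: mirrors the reference code's 1e10).
    deltas = [t_vals[i + 1] - t_vals[i] for i in range(n - 1)] + [1e10]

    rgb = [0.0, 0.0, 0.0]
    depth = 0.0
    acc = 0.0            # accumulated opacity, i.e. the sum of weights
    transmittance = 1.0  # probability the ray reaches this sample unoccluded
    for sigma, color, t, delta in zip(sigmas, colors, t_vals, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this segment
        weight = transmittance * alpha
        for k in range(3):
            rgb[k] += weight * color[k]
        depth += weight * t
        acc += weight
        transmittance *= 1.0 - alpha

    # Disparity: inverse of the weight-normalized expected depth
    # (a ray that hits nothing gets disparity 0 here).
    disparity = 1.0 / max(1e-10, depth / acc) if acc > 0 else 0.0
    return (rgb[0], rgb[1], rgb[2]), depth, disparity


# A ray that passes through empty space and hits an opaque red surface at t = 2
rgb, depth, disp = composite_ray(
    [0.0, 1000.0, 0.0],
    [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 0.0, 1.0)],
    [1.0, 2.0, 3.0],
)
print(rgb, depth, disp)
```

Because RGB and disparity share the same weights, the disparity map is a direct view of where the model has placed density along each ray.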

References

Mildenhall, B., Srinivasan, P. P., Tancik, M., Barron, J. T., Ramamoorthi, R., & Ng, R. NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis. European Conference on Computer Vision (ECCV), 2020.